I was running some benchmarks the other day. I usually have a lot of things running (Teams, Chrome, an IDE, etc.), but I have to close everything to run tight benchmarks.

When I am done, one of the first things I open again is Chrome. That’s when I noticed something: why does CPU usage go up and the fan start spinning as soon as I open Chrome? It should stay at zero. Chrome is extremely resource-hungry when many heavy pages are open, but I don’t have many tabs open.

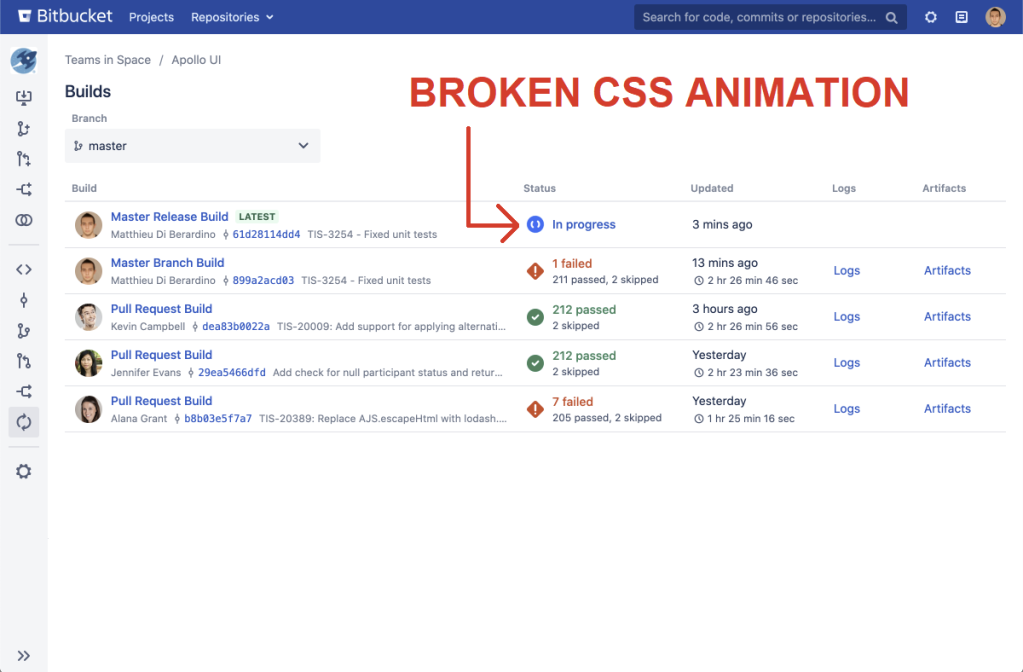

The only tab I have open, which is also my home page, is BitBucket. Coincidence?????

Long story short: there is a buggy animation in the BitBucket web UI that wastes half a CPU core for as long as a build is in progress. The culprit is the “build in progress” icon animation.

The icon is a static SVG image that’s about 3 lines of text.

The icon is rotated by a CSS animation.

```css
.build-status-icon.build-in-progress-icon {
  animation-name: rotate-animation;
  animation-play-state: running;
  animation-iteration-count: infinite;
  animation-duration: 1.5s;
  animation-delay: 0s;
  animation-timing-function: linear;
  transform-origin: center;
}
```

The CSS animation has terrible performance characteristics.
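The snippet above references a `rotate-animation` keyframes rule that isn’t shown. Assuming it is the usual full-turn rotation (BitBucket’s actual definition may differ), it would look roughly like this. Combined with `animation-duration: 1.5s`, `linear` timing and `infinite` iterations, the icon would then complete one full turn every 1.5 seconds, forever:

```css
/* Assumed definition: the original CSS names rotate-animation,
   but its @keyframes rule is not shown. A typical full-turn spin: */
@keyframes rotate-animation {
  from { transform: rotate(0deg); }   /* start upright */
  to   { transform: rotate(360deg); } /* end after one full turn */
}
```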

You can open Chrome’s Task Manager with Shift+Escape.

- When the animation is running (that is, whenever a build is in progress), Chrome shows the tab using 30% to 70% of a CPU core and the GPU running near 90%.

- When the animation is removed (tip: delete the CSS rule or set `animation-play-state` to `paused`), both the CPU and the GPU sit near 0%.

- The CPU still spikes occasionally because the page polls the server periodically to update PR and build status. That part is normal.
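To apply the “paused” tip from the list above without waiting for a fix, a minimal sketch is a user stylesheet override that reuses the selector from the BitBucket CSS quoted earlier. How you inject it (a user-style browser extension, or the Styles pane in DevTools for a quick test) is up to you, and the `!important` is there on the assumption that the site’s own rule would otherwise win:

```css
/* Override for the build-in-progress spinner. The selector comes from
   the BitBucket CSS quoted earlier; !important makes it beat the site's rule. */
.build-status-icon.build-in-progress-icon {
  animation-play-state: paused !important; /* freeze the icon where it is */
  /* animation: none !important;   alternative: drop the animation entirely */
}
```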

These numbers are from a laptop; you might see a third of that with better hardware (tip: use a desktop). I’d love to say this is an old CPU running at a low frequency in power-saving mode, but not quite: this is the most expensive laptop in the manufacturer’s lineup, with the most expensive CPU available. The laptop can’t scale its frequency down because BitBucket and Slack keep requesting (and wasting) too much power, and it can’t really scale up either because of overheating.

In conclusion: bad software running on bad hardware.

## If you are curious for more

That’s all for today, unless you are curious for more details. Personally, I was definitely curious: how can a seemingly trivial animation be so slow?

The Chrome performance tools have a timeline showing the time taken to render each frame. The target is 60 frames per second, or about 16.7 ms per frame.

- The timeline shows 6 ms to render each frame when the animation is running.

- The timeline shows only 1 to 2 ms per frame when the animation is removed.
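As a rough sanity check, assuming Chrome really produces a new frame 60 times per second while the icon spins, the per-frame cost adds up to a steady load on one core:

$$
\begin{aligned}
\text{frame budget} &= \frac{1000\ \text{ms}}{60} \approx 16.7\ \text{ms} \\
\text{animation running:}\quad 6\ \text{ms} \times 60 &= 360\ \text{ms of work per second} \approx 36\%\ \text{of a core}
\end{aligned}
$$

That lands at the low end of the 30% to 70% range reported in the Task Manager, so the per-frame rendering cost alone plausibly accounts for a large share of it.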

The computer is doing a lot more work, recomputing the layout of the entire page. One thing I don’t understand, though, is why Chrome shows full GPU usage. Where does all that GPU time go?

One remarkable thing: the icon displays a circle, but the image is really a square with transparent corners (inside a rectangular div container).

A circle can be rotated in place. It doesn’t take more space than the initial circle. A square cannot.

Did I say the “build in progress” circle is not a circle? The circle is a square! It’s a square!

Rotating a square cannot be done in place. Let me give one quick picture to illustrate.

Hopefully that’s good enough to show that a square image goes out of bounds as it rotates. In this basic example, the table does not resize to fit the rotated image, and the image overlaps other content.
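To put a number on “out of bounds”: for a square of side $s$ rotated by an angle $\theta$ around its center, the axis-aligned box needed to contain it is

$$
w(\theta) = h(\theta) = s\,\bigl(|\cos\theta| + |\sin\theta|\bigr), \qquad
w_{\max} = w(45^\circ) = s\sqrt{2} \approx 1.41\,s
$$

whereas a circle of diameter $s$ always fits in an $s \times s$ box. For a hypothetical 16 px icon (the real size isn’t stated here), the footprint swings between 16 px and about 22.6 px, roughly 3.3 px of overhang on each side, peaking four times per full turn (at 45°, 135°, 225° and 315°).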

In a real-world app that is responsive and highly dynamic, like BitBucket, the page usually adjusts to fit its content: neighboring elements get pushed out to make room for the rotating image, then their neighbors and parent elements get adjusted too… The layout of the entire page is redone on every frame because of the rotating image.

You can see this for yourself if you use BitBucket. Highlight elements with the developer tools (like in the screenshot above) and you will see invisible containers being resized in real time. I think that’s what the CPU is busy doing most of the time.
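To test the layout theory, one experiment is to pin the spinner’s container to a fixed size and ask the browser to isolate it with CSS containment, so that nothing the rotating square does can push its neighbors around. The wrapper class and the 16 px size below are hypothetical; BitBucket’s real markup and icon size may differ. Because the square’s corners are transparent and the visible circle stays inside the box at every angle, clipping the overhang should be invisible. If CPU usage stays high with this in place, the cost is probably somewhere other than layout.

```css
/* Hypothetical wrapper around the spinning icon; class name and size are assumptions. */
.build-status-icon-wrapper {
  width: 16px;            /* fixed size: the box no longer depends on its content */
  height: 16px;
  contain: layout paint;  /* isolate this subtree's layout and clip its painting to the box */
}
```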